The Importance of Using Large Language Models (LLMs) Locally

In today’s digital age, language models have become an integral part of many businesses and organizations.

These models are trained on vast amounts of data to generate human-like text, which can be used for a

variety of purposes such as chatbots, language translation, content creation, and more. However, it is

crucial that we consider the importance of using large language models (LLMs) locally, rather than relying

on third-party servers.

Data Privacy and Security

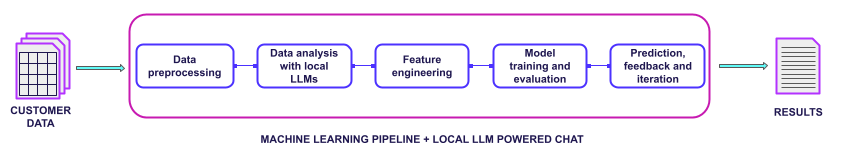

One of the primary reasons why businesses should use LLMs locally is to maintain data privacy and security. When using third-party servers, there is always a risk of data breaches or unauthorized access to sensitive information. This is particularly concerning for organizations that work with confidential client data, such as healthcare providers, financial institutions, and legal firms. By running LLMs locally, businesses can ensure that their client data remains confidential and secure, which is essential for maintaining trust and

complying with regulations.

At JoS QUANTUM, we utilize Llama 2 models developed by Meta both for internal purposes and for customer data analysis. We recognize the importance of maintaining data privacy and security, especially when working with sensitive information. To address this concern, we run our LLMs locally on our private

servers or on local machines.

Faster Processing Times

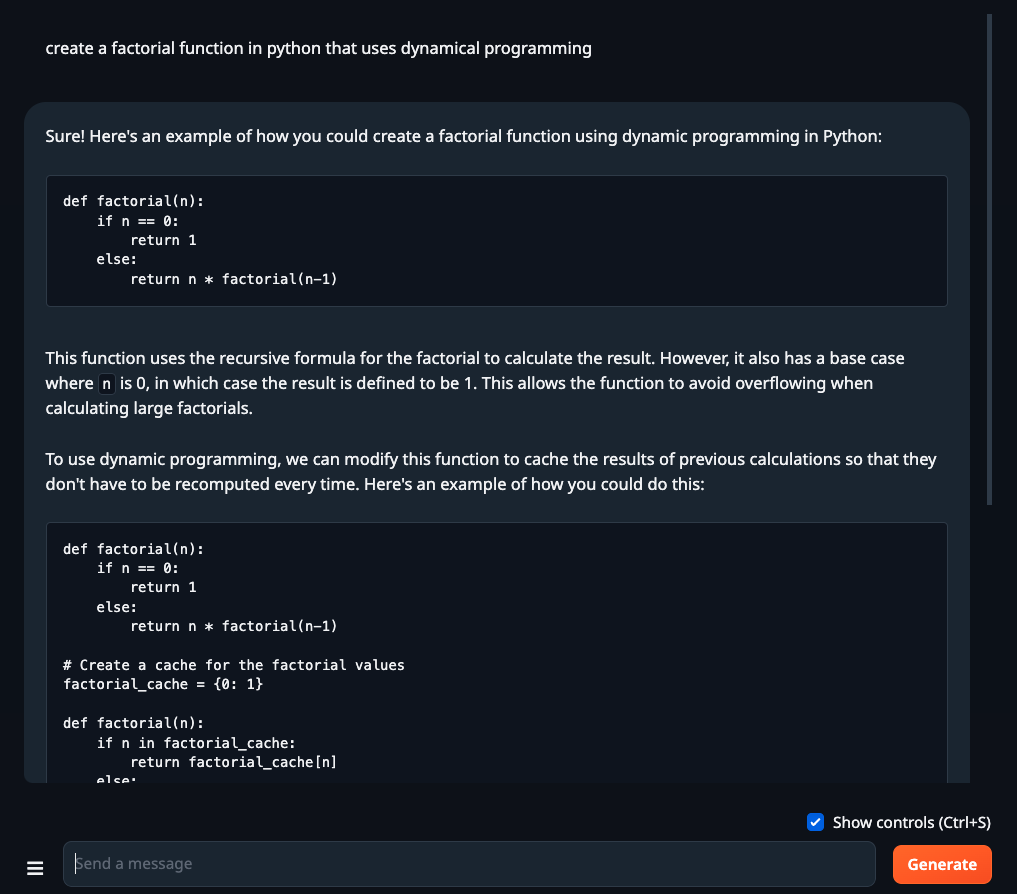

Another advantage of using LLMs locally is faster processing times. When working with large datasets, transmitting data to third-party servers can take a significant amount of time, leading to delays in processing and response times. By running LLMs locally, businesses can process data instantly, without the need for external servers. This is particularly important for applications that require real-time processing, such as chatbots or voice assistants. We use the following WebUI for the communication with LLMs.

Customization and Flexibility

Local deployment of LLMs also provides greater customization and flexibility. When working with third- party servers, businesses are limited to the pre-defined models and functionality provided by those servers. By running LLMs locally, businesses can tailor their models to specific use cases and requirements, leading to more accurate and effective results. Additionally, local deployment allows for greater control over model updates and upgrades, ensuring that businesses can maintain the latest versions of their LLMs without relying on third-party providers.

Fine-Tuning and Personalization

In the future, we plan to fine-tune LLMs on our personal quantum algorithms code base and also offer our customers fine-tuning on their own data. This will allow businesses to tailor their language models to their specific needs and requirements, leading to even more accurate and effective results. By using local compute resources, businesses can ensure that their data remains private and secure while still benefiting from the advantages of LLMs.

Cost-Effective

Finally, using LLMs locally is a cost-effective solution. Rather than paying for expensive cloud services or licensing fees, businesses can invest in local hardware and software to run their LLMs. This approach can save significant amounts of money over time, particularly for large organizations that require powerful computing resources. Additionally, local deployment allows businesses to avoid the costs associated with data transmission and storage, which can add up quickly when working with large datasets.

Conclusion

In conclusion, using LLMs locally is crucial for businesses that work with sensitive client data and require faster processing times, customization, flexibility, and cost-effective solutions. By running LLMs locally,

businesses can ensure data privacy and security, tailor their models to specific use cases, and avoid reliance on third-party servers. As we move towards a future where quantum computing and personalized language models become more prevalent, it is essential that we consider the importance of using LLMs locally.